Understand types of caches and policies to better build your own system

Cache systems demand a delicate balance for applications, as they have many pros and cons. When done right, caching can be performant, increase application speed and decrease costs. However, the integration of cache systems poses the risk of application reliability downgrade.

Engineers need to be well educated in the approaches that could be taken when designing a cache system. Part of the cache system relies on the appropriate selection of caching policies and implementation approaches. The choices an engineer makes should fit the needs of the application behavior.

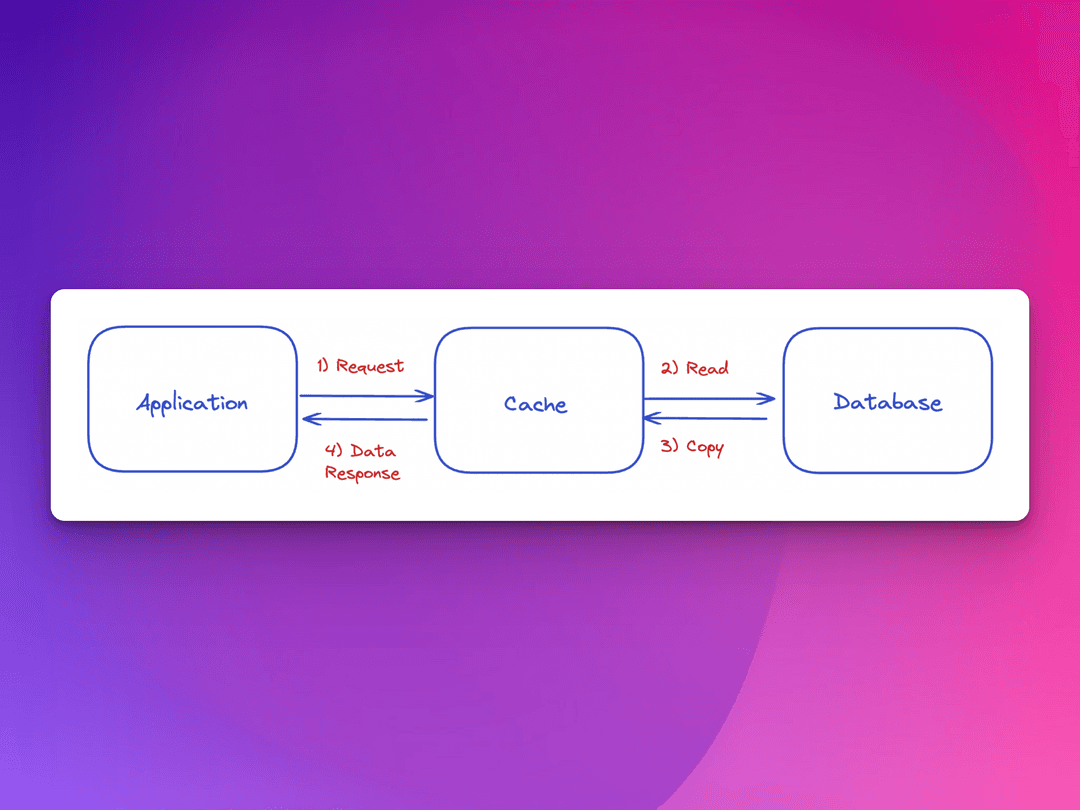

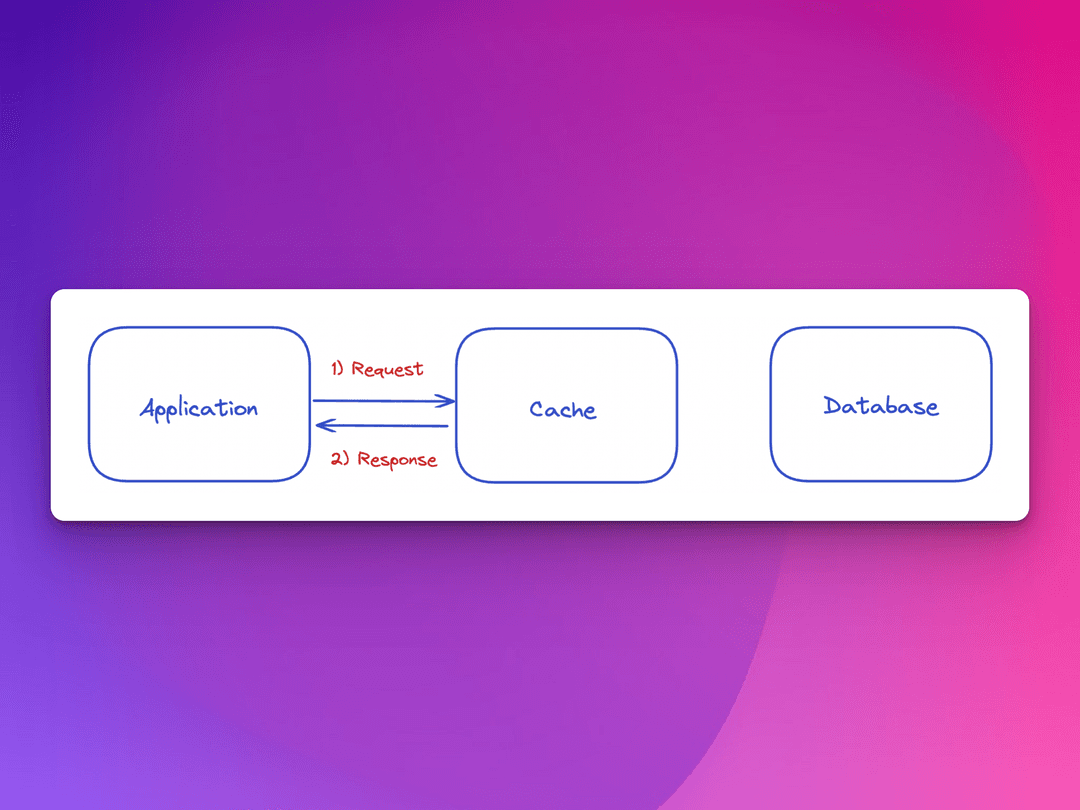

A cache typically sits in between applications and databases. Its sole purpose is to provide with lightning speed the responses applications request. For the cache to do its job correctly, it needs clearly defined rulesets for how it will obtain the data necessary to remain relevant. With that said, there are varying types of caching systems governed by carefully selected cache policies, pertaining to data reads and writes.

Cache read and write policies define when the cache and main memory should be updated to maintain the relevance and reliability of the data when it comes to applications.

The event for which the system or application makes a request to retrieve data from a cache, but that specific data is not currently in the cache memory is known as a cache miss.

A cache hit is when the system or application makes a request to retrieve data from a cache and the data is in fact present and served to the application.

What the cache strives for, are more cache hits that contain accurate representations of the data in the main memory. This is where the read-and-write policies largely come into play.

The read and write policy the cache system follows largely relates to the frequency of cache misses and cache hits.

Cache Read Policy

Cache read policies depict how the cache is to behave on data retrieval attempts from the application. Should the cache be referenced and the data is not present, then the main memory takes on the responsibility of serving the information needed. This is an occurrence we want to minimize, especially if our application is read-heavy.Cache Write Policy

The way in which the cache gets updated when data changes are goverened by the cache write policy.

There are three primary cache write policies.

- write-through

- write-back

- write-around

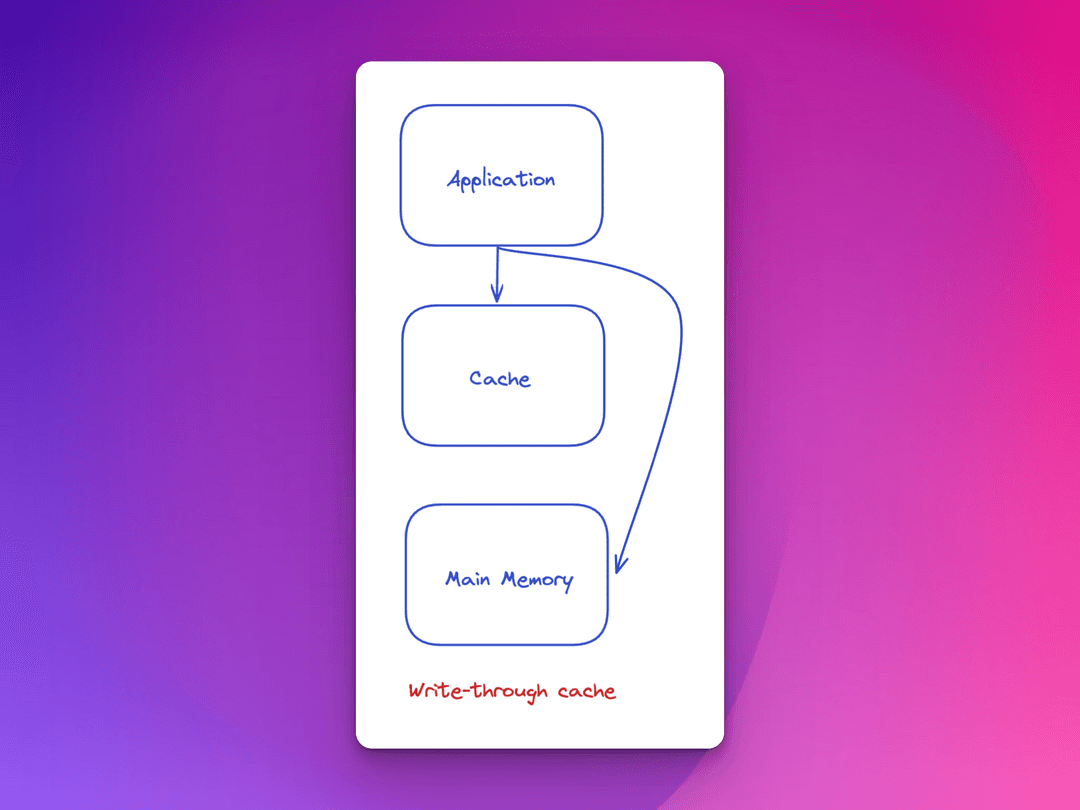

Write-through cache writing policy

Write-through is a cache writing policy in which data is written into the cache and the corresponding main memory location simultaneously.

Advantages of write-through

- Simple

- Reliable

- Aids with data recovery

- Resolves inconsistency problem

The write-through policy is simple and reliable as our data is in constant sync with the main memory location. Any writes to the data will result in an adjustment to the cache, thereby eliminating the risk of a dated cache. In the circumstance of power outages or system failures, we have the cache to aid with the recovery of our data as well. The cache can serve as a backup.

Disadvantages of write-through

- High traffic of writes = worsened performance

- Increased latency

A write-through policy puts into question the advantage of having a cache for write operations. Why? The point of a cache is to avoid going to the main memory, and this policy goes to the main memory one-to-one when it comes to writes.

In the circumstance of high traffic, writing to two sources is not performant, and is vulnerable to latency. Therefore, the write-through cache policy is appropriate for applications that do not have a high write volume.

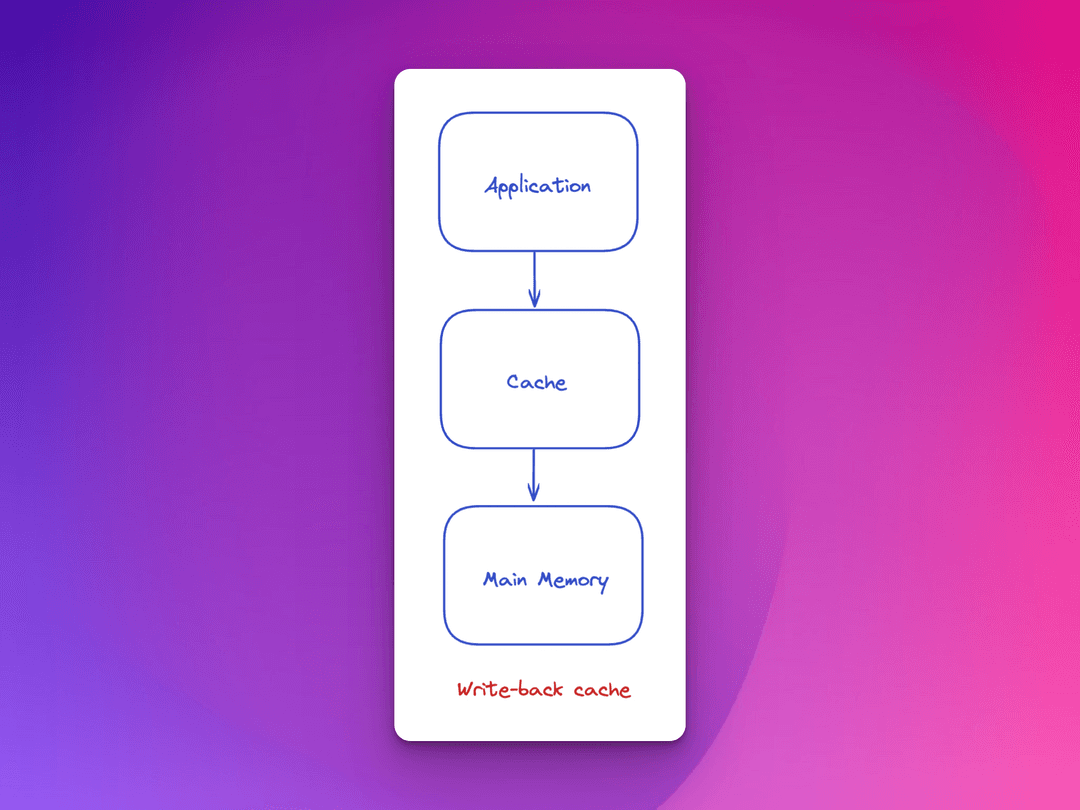

Write-back cache writing policy

Write-back is a cache writing policy in which data is written only to the cache. The data gets written in the background into the corresponding location in the main memory at specified intervals or under specific conditions. The completion confirmation for the write operation remains unblocked when it comes to writes to the backing store or main memory, as this process happens in the background.

Advantages of write-back

- Low latency

- High throughput for write-intensive applications

Writing to the cache is much faster than deferring writes to memory. Therefore the write-back solution can manage many more write requests and complete the requests with low latency.

Disadvantage of write-back

- Risk of data availability/data loss

- Complex

Should the cache fail, we run the risk of suffering from data loss in the event that the data had not been written yet in the background to the backing store.

A write-back cache is more complex to implement than a cache using the write-through policy, as the cache needs to track which data is present solely in the cache but not yet in the main memory. Any updated data not yet reflected in the cache typically gets marked as dirty to indicate the need for a write to the backing store. The data in these locations get written back to the backing store when they are evicted from the cache. The main memory update on the removal rule is an effect referred to as a lazy write. As a result of this defined rule, a read miss in a write-back cache often results in two memory access: one to write the replaced data from the cache back to the store, and one to retrieve the needed data.

The write-back cache is beneficial when it comes to applications with high write volume.

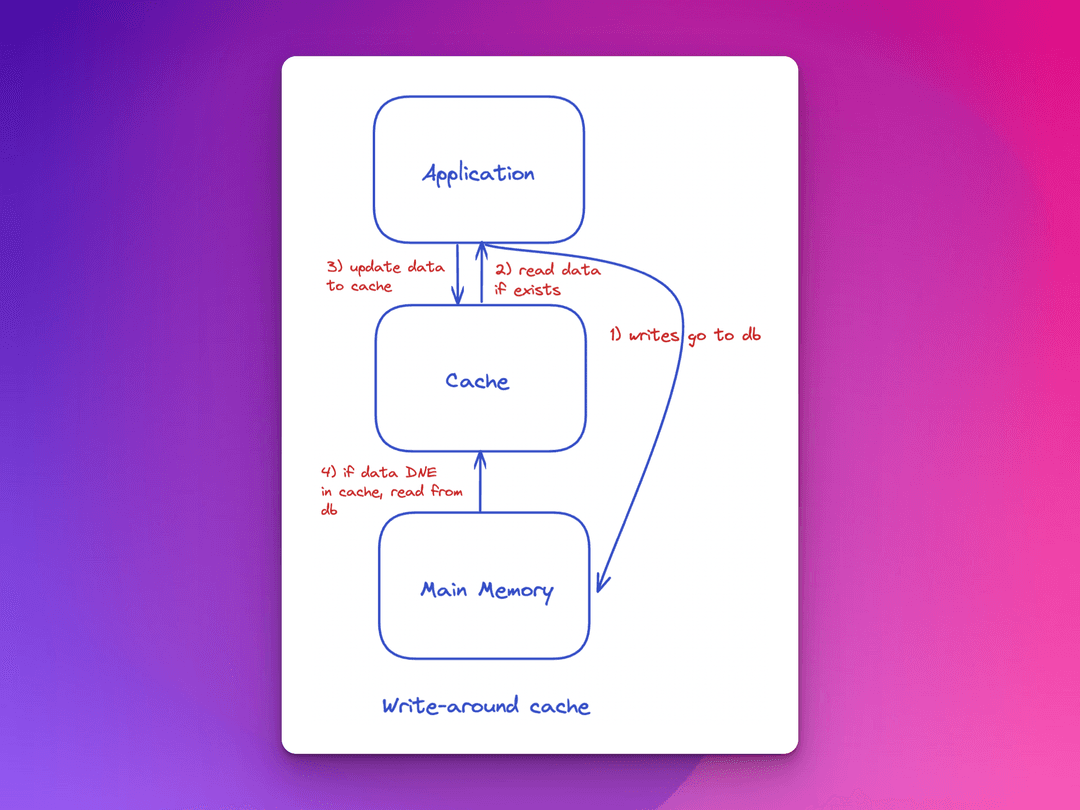

Write-around cache writing policy

The write-around policy has writes go straight to the database first. The cache gets updated after the write to the main memory is complete, or the corresponding key in the cache is deleted to trigger an update the next time a data retrieval from the cache gets attempted.

Advantages of write-around

- Up-to-date database

- Fault tolerance

- Avoidance of cache flooding

Disadvantage of write-around

- Potentially higher read latency

- Potential higher miss rate

The write-around policy works for those applications that write fairly often, and don’t tend to re-read recently written data. The outcome when using this policy results in a cache with values that tend to get read often.

A detail alluded to thus far but not explicitly discussed yet is how we determine when fields in the cache are in need of replacement, given the cache is limited in space.

Cache Replacement Policies

A cache replacement policy or cache eviction algorithm governs the circumstances under which data is subject to being overwritten. The cache eviction policy is necessary because caches tend to be substantially smaller than the main memory to stay cost-effective and efficient.

There are several popular methodologies for determining when to replace data stored in a cache system.

Some cache replacement policies

- First in first out (FIFO)

- Last in first out (LIFO)

- Least recently used (LRU cache)

- Least frequently used (LFU cache)

- Most recently used (MRU cache)

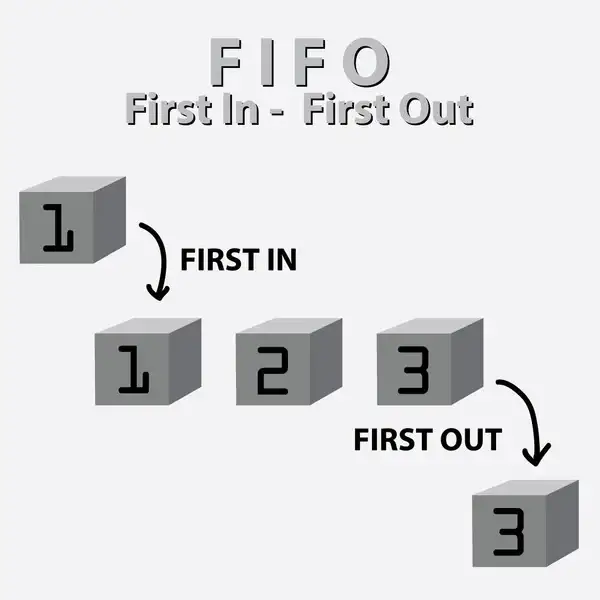

First in First Out (FIFO)

If one is familiar with Queues, the FIFO cache policy will envelop the same behavior.

In a First in First Out or FIFO replacement policy, any item that first got added to the cache is also the first item to be evicted when there is not enough remaining space. The cache evicts blocks according to the order in which they were added, without regard to how often or how many times they were accessed before.

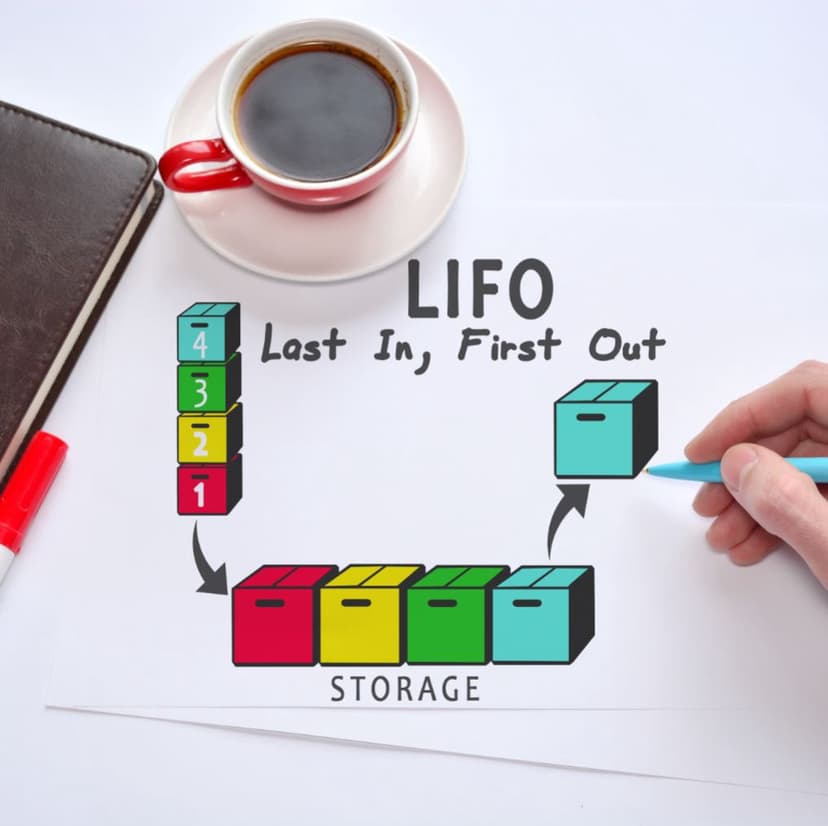

Last in First Out (LIFO)

If one is familiar with the Stack as a data structure, a LIFO or last in first out cache likewise follows the behavior of the Stack.

In a Last in First Out or LIFO replacement policy, any item that last got added to the cache is also the first item to be evicted when there is not enough remaining space.

Least Recently Used (LRU cache)

Now, this style of cache is more interesting and common in a real-world setting. LRU Caches are common interview questions and are highly applicable.

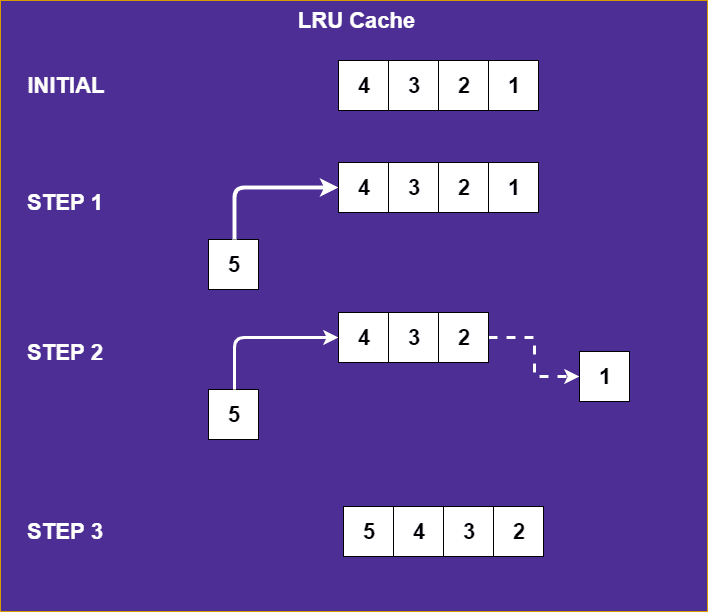

The Least Recently Used or (LRU Cache) keeps track of the items added to the cache, and when they get used. This means that the item that has been retrieved last is the first to get evicted from the cache upon a new entry.

Any item that gets retrieved or added to the cache gets brought to the forefront, to indicate the recency for which it was used. If an item gets added, we can assume by the natural ordering that follows from our addition/retrieval rulesets that the last item in the cache is the least recently used one. Therefore the last item can be evicted from the cache on insertions to a cache that is void of space.

Least Frequently Used (LFU cache)

Though it sounds similar to the least recently used cache, the frequency focuses more on how many times a certain item is referred to from the cache! Instead of keeping tabs on how recently a block was accessed, we store a value depicting how many times it was accessed.

The Least Frequently Used or (LFU Cache) counts how often an item in a cache is needed. Those items in the cache that are used least often are discarded first.

Most Recently Used (MRU cache)

For the Most Recently Used cache or MRU cache, you guessed it, the item that is most recently used is the first to be replaced in a cache!

You might be wondering why we would ever want to evict the most recently used items from a cache.

A most recently used cache or MRU cache applies to situations for which the older an item is, the more likely it is to be accessed. Occasionally, if you have just seen something then it’s unlikely you’ll want to see it again!

For a more literal example of the benefit of this style of cache. Imagine an individual is assessing the quality of recently traded devices. You just examined a phone and determine that it is of higher quality. Would you want to examine the phone again anytime soon? Probably not!

The way the most recently used cache or MRU cache approach works is by keeping a list of the items, for which the item that is most recently viewed becomes the first to be evicted should a new item be inserted.

Summary

Caching systems need to follow rulesets that will determine how effective the cache is at doing its job.

The cache hit ratio is a measurement of how many requests a cache is able to fulfill successfully, in comparison to the number of requests it receives.

Therefore, a cache needs to seek to improve its cache hit ratio.

The cache miss ratio is a measurement of how many requests a cache is unable to fulfill successfully, in comparison to the number of requests it receives.

A cache should seek to minimize the cache miss ratio while ensuring that the data remains relevant.

So, how do we accomplish this?

Caches follow read and write policies, where a few examples of write policies are:

- write-through

- write-back

- write-around

Each type of policy comes with trade-offs that need consideration. Application needs should be taken into consideration, and those are determined by evaluating the behavior of the application.

Ask questions like:

Does the application read data often, or write often?

Or

Does the application have written data become read immediately, or after some time?

Or how about

Does recently read application data get re-read often?

Every application has an applicable read-and-write policy. Each policy accommodates different needs. The disadvantages of each need to be carefully evaluated and monitored.

The next detail to consider is that caches are a small subset when compared to the size of main memory.

Because of the limited size of a cache, the cache also needs to follow a policy that governs when stored data is subject to be replaced with incoming fresh data. This type of policy is better known as the cache replacement policy.

Some cache replacement policies:

- First in first out (FIFO)

- Last in first out (LIFO)

- Least recently used (LRU cache)

- Least frequently used (LFU cache)

- Most recently used (MRU cache)

Remember, developers are creatures that turn coffee into code. So I'd very much appreciate if you bought me a coffee!  I’m a new writer and I will be posting very frequently on my findings and learnings in the tech industry and beyond. Join my newsletter if you would like to stay tuned!

I’m a new writer and I will be posting very frequently on my findings and learnings in the tech industry and beyond. Join my newsletter if you would like to stay tuned!

Thanks for reading again! ❤️

| Understand Open Graph Dynamic Image Meta Tags | 1 |

| Pros and Cons of Caching Data in Software | 2 |

| How to build a Modal in ReactJS (Part One) | 3 |